Automated science as a vision for AI

Human intelligence is a misleading vision for AI. It’s bloatware, and bloatware can kill (or at least seriously delay) even the most determined projects.

The intelligence we need to create in machines isn’t a natural phenomenon; it’s a human invention. The goal isn’t artificial human intelligence; it’s automated scientific discovery.

The bloatware of intelligence

What is intelligence? It’s an inherently controversial and bloated question. Researchers have collected some 70 definitions of intelligence, and that list barely scratches the surface. But whatever your working definition, the human variety of general intelligence is indisputably our best example.

As such, conventional wisdom holds that the only reasonable archetype for artificial intelligence is the human one. But is that true?

“99 percent of human qualities and abilities are simply redundant” — Yuval Noah Harari

Experts would dispute this popular notion. Stuart Russell and Peter Norvig point out that thinking and acting humanly is but one slice of AI; rationality is a peer-level consideration. Clearly, we don’t need to reverse-engineer every human instinct and product of our natural intelligence. As Yuval Noah Harari explains, “AI is nowhere near human-like existence, but 99 percent of human qualities and abilities are simply redundant for the performance of most modern jobs.”

So if human intelligence is bloatware, why is it such an enduring vision for AI? One explanation is its stickiness. Abstractions like rational agents can’t match the concreteness of artificial humans when it comes to inspiring and motivating our efforts. Movies about androids are blockbusters; it’s hard to even recall a movie about rationality.

Another explanation might simply be our tendency to imagine new technologies in the frame of old ones. Cars weren’t cars at first; they were horseless carriages. Telephones were speech-enabled telegraphs. In this context, the idea of AI as artificial human intelligence is certainly forgivable.

Forgivable, but misguided. Human intelligence is bloatware, and bloatware can kill (or at least seriously delay) even the most determined projects.

Knowledge-creating machines

So if human intelligence is misleading, what should guide us? The answer comes into focus when we ask not what artificial intelligence is, but why we want it.

“What society most needs is automated scientific discovery.” — Gary Marcus

In essence, we need AI to deliver inventive solutions to our problems: the means for creating new knowledge. Moreover, we want machines that create good knowledge, meaning effective explanations of how to change the world.

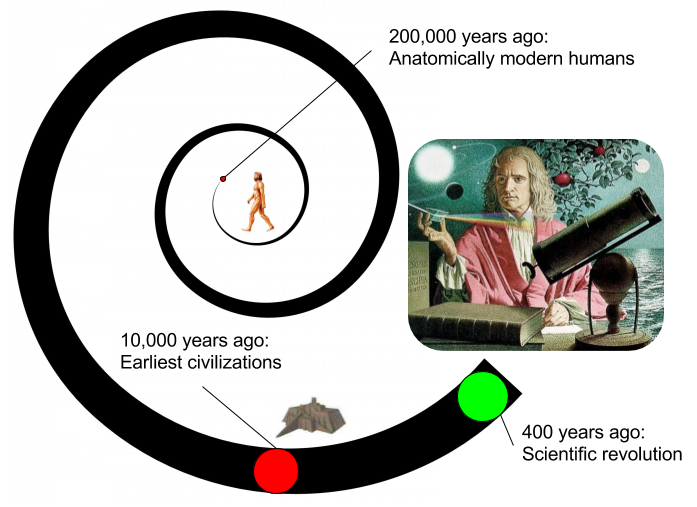

Before you retreat to the comfort of human intelligence, recall that humans are notoriously flawed knowledge creators. Anatomically modern humans have existed for at least 200,000 years.

Yet it’s only within the past few centuries, beginning with the scientific revolution, that humans began making consistent, predictable progress through the creation of good knowledge. Earlier humans produced a wealth of bad knowledge, most of it long forgotten.

This isn’t to say humans were incapable of producing good knowledge. The point is that good knowledge creation was exceedingly rare. We wouldn’t model flight using a bird that failed to fly at such a spectacular rate. As a model for machine intelligence, shouldn’t humans be subject to the same standard of criticism?

The invention of scientific knowledge

We can narrow our expectations for good knowledge further, to the scientific disciplines. According to Gary Marcus, “What society most needs is automated scientific discovery.” Demis Hassabis holds similar ambitions: “I’ve always hoped that A.I. could help us discover completely new ideas in complex scientific domains.”

We expect machines to exhibit superhuman intelligence. Only scientific progress embodies the sort of revolutionary knowledge creation that we imagine for our machines. It’s knowledge that arrives in conjectural leaps, defies our past experience, and redefines what’s possible.

This process of knowledge creation is a human invention, not a natural phenomenon. And on closer inspection, our understanding of how scientific knowledge is created is younger still! It was only in the 20th century, with Karl Popper’s philosophy of science, that a compelling account of how scientific knowledge is created emerged.

Naturally irrational humans are deeply flawed knowledge-creating machines. We only recently acquired the skills we want from machines, and our understanding of how we exercise those skills has not been broadly disseminated. Nature doesn’t provide a model of what we want from intelligent machines, namely revolutionary scientific knowledge, nor is the process humans use to create this knowledge a naturally occurring phenomenon.

If we have machines that generate revolutionary scientific knowledge, problem-solvers that move in conjectural leaps, then every other vista is accessible.

Isn’t a deep understanding of human intelligence prerequisite knowledge? Aren’t the most foundational ideas of neural networks and deep learning derived from this roadmap? And if not, what are the alternatives?

In my next post, I’ll examine the arguments behind the human intelligence roadmap and why it should be treated with skepticism. I’ll also surface the key question for evaluating the merits of your own roadmap.