Breaking the illusion of data-driven AI

Observe data in the wild of cutting-edge applications and its power dissipates, revealing a fresh perspective on how to compete.

According to Sam Harris, the self disappears. The I, the feeling of being a subject, vanishes when you concentrate on it. It’s a jarring empirical claim, an affront to obviousness, that something as primordial as the self can evaporate under scrutiny.

Data is to artificial intelligence as the self is to consciousness. Algorithms and architectures, like consciousness, seem inscrutable. But data just is, a fungible resource like oil, electricity, or money. For many, data is the I in AI, the source of knowledge and intelligence, the stuff that makes the whole thing go.

Perhaps we should just embrace the illusion. When asked, “Why should a relatively rare — and deliberately cultivated — experience of no-self trump this almost constant feeling of a self?” Harris offers, “Because what does not survive scrutiny cannot be real.” We make an effort to see past the illusion because reality matters. A clear view of reality is empowering.

The true nature of data

For Harris, the self “is what it feels like to be thinking without knowing that you are thinking.” So it is with data. Observations are what it feels like to observe. We mistakenly imbue data and observations with the attributes of knowledge, while the intelligence that produces observations labors in obscurity.

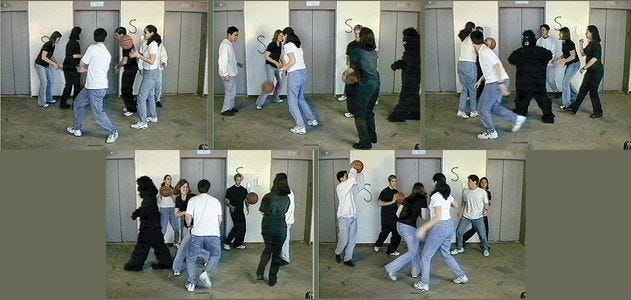

When data “speaks to us” or “surprises us,” it’s only against the backdrop of our expectations, our explanations. In his excellent essay on the fallacy of obviousness, Teppo Felin makes this argument vivid. He recalls a famous psychology experiment in which participants fail to see a gorilla in their midst. But they miss the gorilla, along with much else, only because they’re too busy counting how many times the players pass the basketball.

Felin walks us through a gallery of compelling observations about observations, marshalling testimonies from Charles Darwin, Albert Einstein, Arthur Conan Doyle, Karl Popper, and many others. Felin summarizes, “The world doesn’t tell us what is relevant. Instead, it responds to questions. When looking and observing, we are usually directed toward something, toward answering specific questions or satisfying some curiosities or problems.”

And yet, this defining quality of theory-driven data is frequently overlooked. Felin concludes, “The present AI orthodoxy neglects the question- and theory-driven nature of observation and perception.”

Why the illusion persists

Of course, like the self, the illusion of data persists because it’s useful. This milk-bland characterization makes data easy to talk about, a natural figurehead in AI. It welcomes technologists, managers, and policy wonks alike. It scales from cocktail parties to political movements. Data, like information, must be free!

It persists because it’s inherently optimistic. If knowledge springs from data like water from a glacial spring, we merely have to tap it and let the new knowledge flow. More data, more knowledge. Much of the hyperbole in AI is carried by this illusion.

It’s hard to relinquish something so intuitive, so grand in its promises. But illusions impose a debt, a drag on progress. They mislead legislators into one-size-fits-all policies that treat data as fungible. They lull organizations into inaction, in the belief that data confers impenetrable competitive advantages. And most seriously, they distract technologists from the processes of observation and error correction that lead to inspired solutions.

Here, I want to concentrate on data. I want to set aside the notion that data is a fungible resource. I want to observe data in the service of specific applications. If only momentarily, I want this popular illusion of data to vanish like the gorilla in our midst. In the process, I hope to illuminate where the competitive advantages actually reside.

Observing data in the wild

The examples that follow are drawn from medicine. For the most part, the optimism for AI in medicine is carried by new data. The unstructured data buried in medical records. The newfound quantification of biological systems in omics data. Novel algorithms that derive imaging data from old technologies. The integration of various population and environmental data sources, such as nutrition, lifestyle factors, and demographics. Viewed at a distance, this immense data landscape is breathtaking.

But zoom in and you’ll find these springs of new data hide a secret. Existing knowledge provides the essential observational context that makes the data useful. And this muddies the water considerably.

A few examples illustrate these contingencies.

Image synthesis

Let’s start with a particularly dramatic example of new data in medical imaging. Diagnostic imaging seeks out abnormal scans, which, by definition, are rare. To address this data sparsity problem, researchers are turning to image synthesis: generative models that create artificial medical images. According to Hoo-Chang Shin of NVIDIA and his colleagues, “Reflecting the general trend of the machine learning community, the use of GANs [generative adversarial networks] in medical imaging has increased dramatically in the last year.”

Traditional data augmentation, which manipulates existing images through operations such as rotation and cropping, is insufficient to create this synthetic data. Instead, new techniques leverage background medical knowledge, such as the neural anatomy of the brain and the expected characteristics of tumors. Explanations provide the essential catalyst for new observations.

Bias in health records

Health records are another frequently cited spring of new data, just waiting to be plumbed. Harvard’s Denis Agniel and his colleagues disabuse us of this idea. They describe the inherent biases in electronic health records (EHR). “EHR data, without consideration to context, can easily lead to biases or nonsensical findings, making it unsuitable for many research questions.”

The authors maintain that new insights can be gleaned only by explicitly modeling the processes that make the data so complex. Medical knowledge, such as patient pathophysiology and healthcare process variables, provides the essential context. Without the guiding influence of explanations, data misleads.

Polygenic risk assessments

Even seemingly objective sources such as genetic data are deeply entwined in the broader medical complex. In Nature, Matthew Warren recently reviewed polygenic risk assessments, calling the approach one of the most promising and controversial developments in predictive medicine. “Polygenic scores add together the small — sometimes infinitesimal — contributions of tens to millions of spots on the genome, to create some of the most powerful genetic diagnostics to date.”
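The “adding together” in Warren’s description is, in its common formulation, a weighted sum: each variant’s estimated effect size is multiplied by the number of risk alleles a person carries (0, 1, or 2), and the products are summed. Here is a minimal sketch under that assumption; the function name, weights, and genotypes are hypothetical and not drawn from any real study. Notice what the arithmetic leaves out: nothing in the sum says which biology, environment, or social context makes a given weight matter.

```python
from typing import Sequence


def polygenic_score(dosages: Sequence[int], weights: Sequence[float]) -> float:
    """Weighted sum of risk-allele counts (hypothetical illustration).

    dosages: number of risk alleles carried at each variant (0, 1, or 2)
    weights: estimated per-variant effect sizes, one per variant
    """
    if len(dosages) != len(weights):
        raise ValueError("need exactly one weight per variant")
    for d in dosages:
        if d not in (0, 1, 2):
            raise ValueError("dosage must be 0, 1, or 2")
    # Each variant contributes dosage * effect size; the score is the total.
    return sum(d * w for d, w in zip(dosages, weights))


# Three hypothetical variants; real scores may sum tens to millions of them.
print(polygenic_score([2, 1, 0], [0.5, -0.25, 0.125]))  # 0.75
```

The score is only a number; turning it into an intervention requires knowing what the summed variants actually do.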

Concerns about algorithmic bias are widespread. But more relevant to our discussion is the deep dependence on existing medical knowledge. “Without understanding the biological differences represented by the score — or the environmental and social factors bound to interact with those differences — it’s impossible to know how to intervene.” Explanations open the path to effective interventions.

Mendelian randomization

Another particularly grand example of data-driven thinking is Mendelian randomization, in which genetics is used to mimic clinical trials. As Gary Taubes reports, researchers use naturally occurring genetic differences to run virtual experiments across medicine, social science, psychology, and economics. For example, gene variants that raise the level of “good” HDL cholesterol in a person’s bloodstream can be used as a basis for comparing rates of heart attacks across populations. Because these virtual experiments can be conducted without collecting any new data, the result is “an explosion of studies.”
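To make the logic concrete, here is a minimal sketch of the simplest Mendelian randomization estimator, the Wald ratio. It is a standard textbook estimator, not necessarily the method used in the studies Taubes describes, and the class name and numbers are hypothetical. The idea: if a variant shifts HDL levels, the variant’s effect on heart attacks divided by its effect on HDL estimates HDL’s causal effect.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class VariantAssociation:
    """Hypothetical summary statistics for a single genetic variant."""
    beta_exposure: float  # variant's effect on the exposure (e.g., HDL level)
    beta_outcome: float   # variant's effect on the outcome (e.g., heart-attack log-odds)
    se_outcome: float     # standard error of beta_outcome


def wald_ratio(v: VariantAssociation) -> Tuple[float, float]:
    """Return the causal-effect estimate and its first-order standard error."""
    if v.beta_exposure == 0:
        raise ValueError("the variant must affect the exposure")
    estimate = v.beta_outcome / v.beta_exposure
    # First-order approximation: ignores uncertainty in beta_exposure.
    se = v.se_outcome / abs(v.beta_exposure)
    return estimate, se


print(wald_ratio(VariantAssociation(beta_exposure=0.5, beta_outcome=-0.25, se_outcome=0.125)))
# (-0.5, 0.25)
```

The division is meaningful only if the variant influences the outcome solely through the exposure, an assumption the data cannot verify on its own. It has to come from biological understanding.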

Explosions of new studies, like explosions of data, are routinely cast in optimistic terms, yet the pioneers of Mendelian randomization “now seem as worried about how the technique will be misused as they are excited about its promise.” Mendelian randomization can illuminate the lifelong impact of genes, but it can’t determine whether treatments tied to that information will help patients in the present. To be useful, data must be situated within much broader explanatory frameworks.

In reviewing these examples, you’re undoubtedly struck by the variety and volume of the data. But what animates it, what gives it life? Data is acquired based on expectations of what’s needed and what can be ignored. Data is generated based on explanations of how the world works. Data is interpreted and applied within an edifice of supporting knowledge.

The power of data dissipates in the light of explanations. AI dramatically amplifies our observational capacity, much like telescopes amplify our view of the cosmos. But explanations dictate where the telescope should point, and explanations make sense of what we find.

Data’s last stand

Ultimately, we retreat to data’s last stand: Data must be good. Emptied of explanations, data is a husk. And so we keep adding qualifiers to prop up the illusion. Good data is a matter of volume, variety, velocity, variability, veracity, and on and on. We do this because fungible data lacks meaning. We do this because fungible data is such an affront to reality.

Megan Beck and Barry Libert describe a jarring realization that travels with data-driven thinking. “Companies racing to simultaneously define and implement machine learning are finding, to their surprise, that implementing the algorithms used to make machines intelligent about a data set or problem is the easy part.”

So where does the value lie? In good data. “What’s not becoming commoditized, though, is data. Instead, data is emerging as the key differentiator in the machine learning race. This is because good data is uncommon.” The world spews data incessantly, but good data is uncommon.

Beck and Libert add this critical remark: organizations are inattentive to explanations. “The step that many organizations omit is creating a hypothesis about what matters.” They miss the gorilla in their midst because they’re too busy counting basketball passes.

A new perspective

When we shift our perspective to explanations, it becomes clear why good data is uncommon. Good data is uncommon because good explanations are uncommon. Explanations are the rare stuff that makes the whole thing go.

Data is useless without an intelligent observing process, a process that begins before the AI is built and continues long after the AI’s work is finished. This knowledge pipeline depends on people, who contribute all the creative elements that are most associated with intelligence. This is the real source of enduring competitive advantage. And unlike data, creativity is a resource that’s hard to monopolize.

When the illusion of data is broken, perspective shifts and opportunities sharpen. Unlike oil, data no longer seems a scarce resource. The world produces an overwhelming amount of it. Even old data, continuously mined for insights, is revealed as a renewable resource. It’s renewed by fresh perspectives and new explanations.

Like consciousness, these things are far from understood. We really have no idea how conjectural knowledge is created. It’s the creative block that stands between us and artificial general intelligence. But putting illusions in its place, like the idea that knowledge is derived from data, gets us no closer.

Concentrating on the nature of explanations just might.

Agniel, D., Kohane, I., & Weber, G. (2018). Biases in electronic health record data due to processes within the healthcare system: retrospective observational study. BMJ. https://doi.org/10.1136/bmj.k1479

Beck, M. & Libert, B. (2018). The Machine Learning Race Is Really a Data Race. MIT Sloan Management Review. https://sloanreview.mit.edu/article/the-machine-learning-race-is-really-a-data-race/

Felin, T. (2018). The fallacy of obviousness. Aeon. https://aeon.co/essays/are-humans-really-blind-to-the-gorilla-on-the-basketball-court

Shin, H.-C., et al. (2018). Medical Image Synthesis for Data Augmentation and Anonymization using Generative Adversarial Networks. arXiv. https://arxiv.org/abs/1807.10225

Taubes, G. (2018). Researchers find a way to mimic clinical trials using genetics. MIT Technology Review. https://www.technologyreview.com/s/611713/researchers-find-way-to-mimic-clinical-trials-using-genetics/

Warren, M. (2018). The approach to predictive medicine that is taking genomics research by storm. Nature. https://doi.org/10.1038/d41586-018-06956-3